The EU AI Act and the Algorithmic Management Directive: Their Impact on HR Practices

Imagine this: You’re going in for a job interview, but before you even speak to a human being, a computer has already decided whether you’re a “yes” or a “no.” It sorted your CV, measured your tone of voice in a pre-recorded video, and ran predictive analytics on how likely you are to succeed.

Now, let’s take it a step further. What if the same kind of system monitored your daily performance, decided how your shifts were scheduled, nudged your behavior, and even evaluated your emotional state? Sounds like science fiction? It’s not. It’s happening (right now) in workplaces across Europe and beyond.

The rise of artificial intelligence and algorithmic management is transforming how we work, how we’re hired, and how we’re managed. And while AI can offer efficiency, speed, and insights, it can also bring something else: bias, surveillance, and decisions made without accountability or explanation.

That’s why the European Union is stepping in with purpose. Two major legislative moves are now on the table: the EU AI Act, already adopted in 2024, and the Proposed Algorithmic Management Directive, still under discussion. These aren’t just policy tweaks; they are foundational shifts in how we protect workers, uphold dignity in the workplace, and ensure that technology serves humanity, not the other way around.

Understanding the EU AI Act

In August 2024, the European Union did something groundbreaking: the EU AI Act, the world’s first full-scale legal framework to govern artificial intelligence, entered into force. This isn’t just a tech regulation; it’s a people-first law, one that dares to ask: How do we build trust in AI while protecting the very human lives it touches?

Across Europe, AI isn’t some distant concept. It’s already reading résumés, scheduling shifts, tracking productivity, and shaping careers. The EU AI Act says: if we’re going to allow machines to make decisions about people’s lives, then we’d better do it right. Ethically. Transparently. And with clear guardrails.

The four levels of AI risk and why HR is in the hot seat

The EU AI Act doesn’t take a one-size-fits-all approach. Instead, it looks at risk and assigns responsibilities based on how much potential harm an AI system could do. Here’s how it breaks down:

| Risk Level | Description |
| --- | --- |
| Minimal Risk | Basic AI tools with very low potential for harm. Examples include spam filters or AI that formats documents. These systems require minimal oversight and face few regulatory obligations. |
| Limited Risk | Transparency-focused. Users must be informed when interacting with AI (e.g., chatbots) or when content is AI-generated (e.g., deepfakes). Honesty and clarity are key. |
| High Risk | Significant implications for HR. Covers AI systems that: sort CVs and conduct video interviews; monitor or supervise employees; evaluate performance using analytics. These systems handle sensitive data and must meet strict compliance standards to protect individuals’ rights. |
| Unacceptable Risk | AI systems that pose a clear threat to fundamental rights and are banned outright. This includes: emotion recognition in the workplace; neuro-surveillance; social scoring. Such technologies are considered manipulative, invasive, and contrary to EU values. |

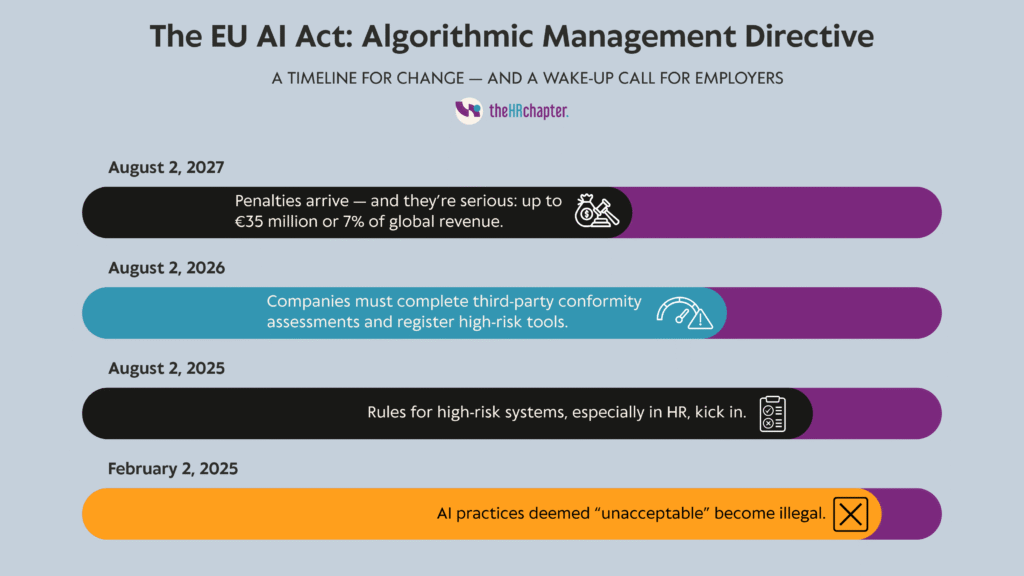
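To make the tiers concrete, an HR team could keep a simple inventory that tags each tool with a tier. The sketch below is only an illustration: the use-case labels, the `USE_CASE_TIERS` mapping, and the function names are hypothetical, drawn from the examples in the table above, and are not a legal classification.

```python
from enum import Enum
from typing import Dict, List, Optional


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Hypothetical use-case labels mapped to the tiers from the table above.
# This mapping is an assumption for illustration, not legal advice.
USE_CASE_TIERS: Dict[str, RiskTier] = {
    "spam_filter": RiskTier.MINIMAL,
    "document_formatting": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "cv_sorting": RiskTier.HIGH,
    "video_interview_analysis": RiskTier.HIGH,
    "employee_monitoring": RiskTier.HIGH,
    "performance_analytics": RiskTier.HIGH,
    "workplace_emotion_recognition": RiskTier.UNACCEPTABLE,
    "social_scoring": RiskTier.UNACCEPTABLE,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known use case, or None if it needs manual review."""
    return USE_CASE_TIERS.get(use_case)


def tools_needing_action(inventory: Dict[str, str]) -> Dict[str, List[str]]:
    """Group tool names (keys of `inventory`) by the follow-up they need."""
    result: Dict[str, List[str]] = {
        "stop_using": [],
        "compliance_review": [],
        "manual_classification": [],
    }
    for tool, use_case in inventory.items():
        tier = classify(use_case)
        if tier is None:
            result["manual_classification"].append(tool)
        elif tier is RiskTier.UNACCEPTABLE:
            result["stop_using"].append(tool)
        elif tier is RiskTier.HIGH:
            result["compliance_review"].append(tool)
        # Minimal- and limited-risk tools need no entry in this action list.
    return result
```

Anything the mapping does not recognize is deliberately routed to manual classification rather than assumed to be low risk.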

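The EU is rolling this out gradually, but the clock is ticking. Under the Act as adopted, the bans on unacceptable-risk practices have applied since February 2025, and most obligations for high-risk systems are scheduled to apply from August 2026.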

For HR, compliance isn’t optional. It’s a responsibility

Let’s make this real. If your company is using AI to hire, manage, or assess people, here’s what the law now expects:

- Human Oversight: No more black-box decisions. A person (not a program) must be able to review and override automated choices.

- Data Governance: The data behind the AI must be clean, accurate, and fair. Employers must only collect what’s needed, and get explicit consent for anything extra.

- Documentation & Audits: Keep records. Lots of them. From how the system works to how it’s tested, documented compliance is now a legal requirement.

- Employee Training: Everyone who interacts with high-risk AI (especially HR staff) needs to be trained to understand what it does, how it works, and what to watch out for.

At its heart, the EU AI Act is about balance: between innovation and protection, progress and accountability, efficiency and empathy. It’s about putting people first, even as machines become more powerful.

Because here’s the truth: technology may shape the future, but it’s humans who should shape technology.

The proposed Algorithmic Management Directive

Technology doesn’t just watch; it decides what we do, how we do it, and sometimes whether we do it at all. From freelancers accepting gig work to full-time employees under constant performance monitoring, workers across Europe are increasingly managed not by people, but by systems.

That’s why the European Union is stepping forward with something bold: the Proposed Algorithmic Management Directive. It’s a mouthful, but what it stands for is simple and powerful: workers deserve transparency, dignity, and a say in how they’re managed. Whether you’re an employee or a solo self-employed person, your livelihood shouldn’t be controlled by a black box.

What is Algorithmic Management, and why does it matter?

At its core, algorithmic management is when technology (including artificial intelligence) is used to:

- Monitor your work

- Supervise your output

- Evaluate your performance

- Or even make decisions that affect your job, your hours, your pay, or your future

This isn’t hypothetical. It’s real. Delivery drivers being rated by an app. Remote employees tracked by keystroke data. Gig workers accepting or losing tasks based on opaque algorithms.

The Proposed Directive, published in June 2025 by the European Parliament’s Committee on Employment and Social Affairs, acknowledges something critical: existing laws like the GDPR don’t go far enough. And even the EU AI Act (as comprehensive as it is) doesn’t fully protect workers’ rights in the context of AI at work. This directive is designed to close that gap.

What the directive proposes: rights, oversight, and respect

Let’s break down what this proposal is all about — and why it matters for anyone working in an AI-driven environment:

| Provision | Purpose | Description |
| --- | --- | --- |
| Right to Explanation & Transparency | Empower workers with clarity and control over AI-driven decisions | Workers must receive clear, understandable explanations (not just technical jargon) about how algorithmic systems influence decisions related to schedules, pay, performance, or employment status. Transparency around the logic and data behind those decisions builds trust and accountability. |
| Worker Consultation on AI Tools | Ensure participatory decision-making and protect collective rights | Employers are required to consult with workers before introducing or updating algorithmic management systems. This enables meaningful input and safeguards democratic processes at work. Real case: In 2025, a court in Nanterre, France, suspended an AI rollout because the works council had not been properly consulted, setting a precedent for worker inclusion. |
| Limits on Intrusive Monitoring | Protect workers’ privacy, dignity, and fundamental rights | The directive bans invasive practices, including: monitoring emotional or psychological states; neuro-surveillance or eavesdropping; tracking off-duty or private behavior; profiling based on union activity or the exercise of rights; inferring sensitive data (health, race, etc.). This is about drawing ethical boundaries in the workplace. |
| Mandatory Human Oversight | Prevent harm by ensuring accountability in AI-driven decisions | AI systems must not operate unchecked. A human must always oversee and be able to question, override, or stop any decision that could negatively impact a worker. This reinforces fairness and guards against automation errors or bias. |

The directive is still in motion. The Employment Committee is set to vote in December 2025, and if approved, the full Parliament would ask the European Commission to draft it into law. We could see a formal legislative proposal in 2026 or 2027. Will the final version look exactly like the draft? Probably not. But the heart of it is unlikely to change: a call for greater fairness, accountability, and humanity in algorithmic management.

Implications for HR

The EU AI Act already tells us which systems are high-risk. It sets the guardrails. But this proposed Algorithmic Management Directive goes deeper: into the workplace, into the relationships, and into the lives affected by AI decisions.

It doesn’t just regulate systems; it protects people. It asks: Are workers being treated fairly? Are they being informed? Are they being respected?

And those are the questions every HR leader should be asking too.

1. Recruitment & Hiring: More than a checkbox

AI-driven hiring tools, from CV-sorting software to video interview analyzers, are now classified as “high-risk” under the EU AI Act. That means using them comes with serious legal and ethical obligations.

HR must ensure:

- Transparency: Candidates need to know when AI is involved in the process.

- Bias Mitigation: Algorithms must be trained on quality, representative data to avoid discrimination.

- Oversight: Automated decisions must be monitored, audited, and — if necessary — overridden by a human being.

2. Performance Monitoring & Evaluation: Where trust can break or bloom

AI is increasingly used to track productivity, flag behavior, and assess worker performance. But under both the AI Act and the proposed Directive, this kind of surveillance is not business as usual.

Taken together, the Act and the proposed Directive say:

- Monitoring tools must be proportional and respectful.

- Emotion tracking and neuro-surveillance? Banned.

- Workers must be consulted and provided with clear explanations for how their data is used.

- HR must take a leading role in human oversight, ensuring AI doesn’t silently punish, manipulate, or profile employees.

3. Automated Decision-Making: Who’s really in charge?

From shift scheduling to contract renewals, more and more decisions are being made or influenced by AI. But under the proposed Algorithmic Management Directive, fully automated decisions affecting workers must be explainable, contestable, and (above all) reviewable by a human.

That means:

- Every HR department needs a clear protocol for how AI-influenced decisions are made.

- Workers must have a right to explanation and the ability to challenge outcomes.

- HR must act as a bridge between systems and people, advocating for transparency and fairness.
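A protocol along these lines can be sketched as a decision record that cannot take effect against a worker until it carries a plain-language explanation and a named human has signed off. This is a minimal illustration under stated assumptions: the `AIDecision` class, its fields, and its rules are hypothetical and are not taken from the Act or the draft Directive.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AIDecision:
    """An AI-influenced decision about a worker (all names are hypothetical)."""
    worker_id: str
    decision: str        # e.g. "reduce_shifts"
    explanation: str     # plain-language reason shown to the worker
    adverse: bool        # True if it could negatively affect the worker
    reviewer: Optional[str] = None
    overridden: bool = False
    challenges: List[str] = field(default_factory=list)

    def review(self, reviewer: str, override: bool = False) -> None:
        """Record which human reviewed the decision and whether they overrode it."""
        self.reviewer = reviewer
        self.overridden = override

    def challenge(self, reason: str) -> None:
        """Log a worker's challenge and clear the sign-off so a fresh review is required."""
        self.challenges.append(reason)
        self.reviewer = None

    def can_take_effect(self) -> bool:
        """Overridden decisions never apply; adverse ones need an explanation and a reviewer."""
        if self.overridden:
            return False
        if not self.adverse:
            return True
        return bool(self.explanation.strip()) and self.reviewer is not None
```

For example, an adverse `reduce_shifts` decision stays blocked until `review("hr.manager")` is called, and a later `challenge(...)` from the worker blocks it again until a human looks at it once more.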

4. Implications for HR Teams: Evolving roles, elevated standards

The combination of these two legislative forces reshapes what it means to be in HR:

| Old Role | New Role Under the AI Act & Directive |
| --- | --- |
| Recruiter or HR Manager | AI Compliance Leader |
| Employee Relations Specialist | Digital Rights Advocate |
| Process Optimizer | Ethical Technology Interpreter |
| Policy Enforcer | Cultural Trust Builder |

To meet these expectations, HR teams must:

- Collaborate with legal, IT, and ethics officers

- Audit current systems for risk exposure

- Update internal policies to reflect regulatory requirements

- Train staff on responsible AI use and digital rights

5. For Multinational Companies: One continent, many implications

- Regulations like the AI Act apply across borders, but national labor laws may differ.

- Works councils, trade unions, and employee committees may require different consultation mechanisms.

- Vendor management becomes crucial: third-party tools must also comply with EU laws.

HR leaders must take a cohesive yet localized approach, ensuring compliance while preserving organizational values.

Leading with heart in the age of algorithms

We are living through a transformation that’s bigger than just policy updates or software upgrades. This is a shift in how we define fairness, leadership, and trust in the modern workplace. Artificial intelligence is no longer a future issue; it’s already here, shaping who gets hired, how we’re managed, and what we’re worth.

The EU AI Act and the proposed Algorithmic Management Directive are not just legal tools — they are ethical compasses. They ask every organization, every HR team, and every leader to answer one vital question:

Are we using technology to serve people — or to control them?

If you’re reading this and feeling overwhelmed, you’re not alone. This is complex. It’s evolving. And it requires boldness, care, and the right guidance.

That’s where we come in.

At theHRchapter, we help HR leaders and organizations navigate the intersection of people, policy, and technology with clarity, compassion, and compliance. Whether you need help auditing your systems, preparing for EU legislation, or building trust with your workforce in the digital age, we’re here for you.

Because when we lead with heart, even in the age of algorithms, everyone wins.

Need support or want to explore how we can help?

Contact us at theHRchapter and let’s shape the future of work, together.
